1. Test design cycle

Test design is a complex process involving many steps, which are repeated cyclically to ensure test quality. Although ‘writing items and tasks’ appears as a single step in the cycle, item writing is in fact a continuous process. It starts with developing item specifications and continues after the first version of an item has been written, through item review, revision, and trialling.

The Dictionary of Language Testing defines item writing as “the stage of test development in which test items are produced, according to a set of test specifications” (Davies et al., 1999, p. 99). Green (2014) gives a similar definition: item writing is “turning specifications into working assessments” (p. 43). Both definitions stress the importance of specifications in item writing: as a rule, items should be produced from a set of specifications.

People who produce test items are usually called item writers. Item writers who work for large-scale testing organisations are often freelancers. They are often (but not always) trained in item writing by those organisations, and their skills develop as they write more and more items.

For classroom teachers, writing test tasks and items is part of their daily routine. In many cases, they have not been trained to do this, although resources to support teachers in producing test items are becoming increasingly available.

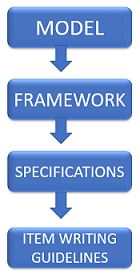

2. Item-writing documentation

The definitions of item writing provided by Davies et al. (1999) and Green (2014) suggest that all item writing should be based on existing documentation. This documentation normally includes models, test frameworks, test specifications and, sometimes, item-writing guidelines.

Models are the most general documents, “providing a theoretical overview of what we understand by what it means to know and use a language” (Fulcher & Davidson, 2009, p. 126). One type is models of communicative competence, which describe the knowledge and skills a competent user of a language must have. Some of the most influential models of communicative competence are those proposed by Canale and Swain (1980) and by Bachman (1990). There are also performance models, which describe what a competent user of a language should be able to do in that language. One example of a performance model is the Common European Framework of Reference (CEFR).

A test framework document states the purpose of a particular test and its construct. The construct is normally drawn from models. In other words, a test is an operationalisation of the particular theory of language that its designers subscribe to.

Construct – what the test is intended to measure

Test specifications form the next layer of test documentation. They are “the design documents that show us how to construct a test” (Fulcher, 2010, p. 127). They are also the documents that item writers refer to while creating items and tasks.

Some testing organisations also create item-writing guidelines based on their test specifications. These guidelines then become the primary documents item writers work with. Item-writing guidelines normally include a level of practical detail that other stakeholders do not need in the test specifications but that is essential for writing particular items.

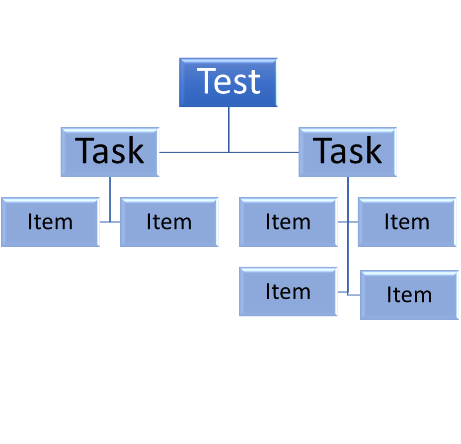

3. Tests, tasks, and items

A test is a series of tasks used to measure a test-taker’s ability (knowledge and skills).

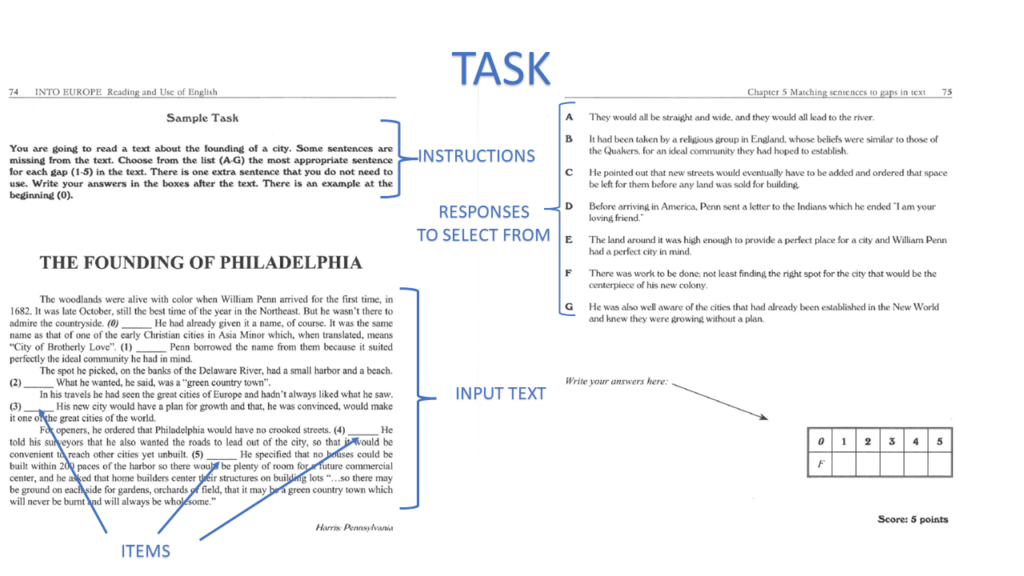

A task is “what a test-taker has to do during part of a test” (Davies et al., 1999, p. 196). A task normally includes instructions, input, and one or more items.

An item is “part of a test which requires a specified form of response from the test taker” (Davies et al., 1999, p. 201).

4. Types of tasks / items

Tasks and items can be of two types, depending on what kind of response is required from test-takers.

Selected-response items, as the name suggests, require test-takers to select the correct response from the options provided. Examples include multiple-choice, true/false, and multiple-matching items.

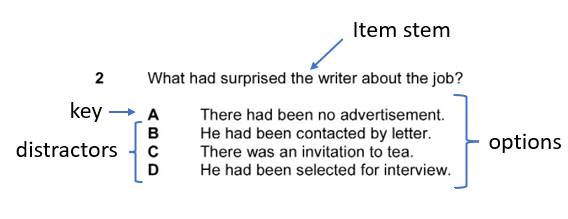

A multiple-choice item normally consists of a stem (the question) and several options, often three or four. The correct option is called the key. All other options are called distractors because their aim is to distract test-takers from the correct answer.

Constructed-response items and tasks require test-takers to construct their own response. The response can be short, just a word or a few words (e.g., cloze tasks, short-answer questions), or quite long (e.g., an essay, a letter, responses in an oral interview).

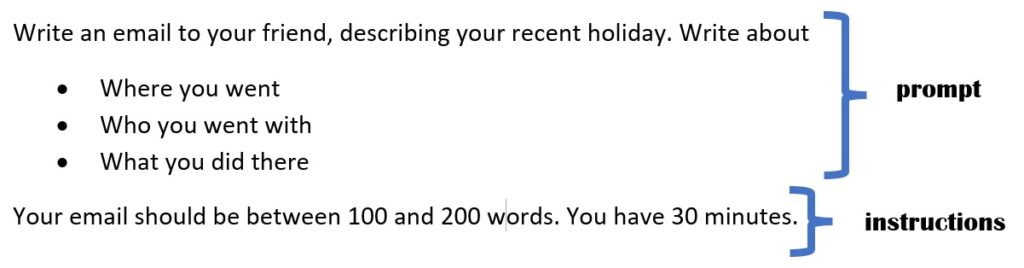

The writing task above includes instructions and a prompt. The instructions set out how test-takers must respond to the prompt (e.g., the required length of the response and the time allowed). The prompt provides some input information and specifies what test-takers must write about.

References

Bachman, L. F. (1990). Fundamental considerations in language testing. Oxford University Press.

Canale, M., & Swain, M. (1980). Theoretical bases of communicative approaches to second language teaching and testing. Applied Linguistics, 1(1), 1–47.

Davies, A., Brown, A., Elder, C., Hill, K., Lumley, T., & McNamara, T. (1999). Dictionary of language testing. Cambridge University Press.

Fulcher, G., & Davidson, F. (2009). Test architecture, test retrofit. Language Testing, 26(1), 123–144.

Fulcher, G. (2010). Practical language testing. Hodder Education.

Green, A. (2014). Exploring language assessment and testing: Language in action. Routledge.